I was lucky enough to spend last week with a loaner pair of Google Glass. My place of work purchased them, and I was asked to try them out and evaluate them for possible library use or for development of apps by the library. I’m far from the first person to write about their experience with Glass, but I wanted to write up my experience and reactions as an exercise in forcing myself to think critically about the technology. I’m splitting it into two posts: one about the impact and uses of Glass in libraries, and a second about my more general impressions as a Glass user in my overall daily life.

Without further ado, let’s look at the library perspective: I came away with one major area for library Glass development in mind, plus a couple of minor (but still important) ones.

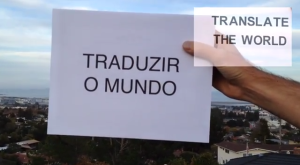

One big area for library development on Google Glass: Textual capture and analysis

Image from AllThingsD

One of the most impressive apps I tried with Glass, and one of only a handful of times I was truly amazed by its capabilities, was a translation app called Word Lens. Word Lens gives you a realtime view of any printed text in front of you, translated into a language of your choice. In practice I found the translation’s accuracy to be lacking, but the fact that this works at all is amazing. It even attempts to replicate the font and placement of the text, giving you a true augmented view and not just raw text. Word Lens admittedly burned through Glass’ battery in less than half an hour and made the hardware almost too hot to touch, but imagine this technology rolled forward into a second or third generation product! While similar functionality is available in smartphone apps today (this is a repeating refrain about using Glass that I’ll come back to in my next post), translating, archiving, and otherwise manipulating text in this kind of ambient manner makes Glass many times more useful than a smartphone counterpart. Instead of having to choose to point a phone at one sign, you could have street signs and maps automatically translated as you wander a foreign city, or research material translated as you sit and read it in another language.

I want to see this taken further. Auto-save the captured text into my Evernote account and while you’re at it, save a copy of every word I look at all day. Or all the way through my research process. Make that searchable, even the pages I just flipped past because I thought they didn’t look valuable at the time. Dump all that into a text-mining program and save every image I’ve looked at for future use in an art project. I admit I drool a little bit over the prospect of such a tool existing. Again, a smartphone could do all of this too. But using Glass instead frees up both of my hands and lets the capture happen in a way that doesn’t interfere with the research itself. The possibilities here for digital humanities work seem endless, and I hope explorations of the space include library-sponsored efforts.

Other areas for library development on Google Glass:

Tours and special collections highlights

The University of Virginia has already done some work in this area. While you wander campus with their app installed, Glass alerts you when you’re close to a location referenced in their archival photo collections and shows you the old image of your current location. This is neat, and especially while Glass is still on the new side, it will likely get your library some press. NC State’s libraries, for example, have done great work with their Wolfwalk mobile device tour, which seems like a natural product to port over to Glass. This is probably also the most straightforward kind of Glass app for a library or campus to implement. Google’s own Field Trip app for Glass and smartphones already points out locations of historical or other interest to you as you walk around town. The concept is proven, it works, and it is ready to be built on.
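For the curious, the heart of a tour app like this is a simple proximity check: compare the wearer’s current position against a list of geotagged photos and surface the ones within a short walk. Here is a rough Python sketch of that idea. It is not how UVA’s app or Field Trip actually works, and it is not Glass code. The photo records, coordinates, field names, and 50-meter radius are all made up for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_photos(lat, lon, photos, radius_m=50):
    """Return the photos within radius_m of (lat, lon), nearest first.

    `photos` is a list of dicts with hypothetical keys "title", "lat", "lon".
    """
    hits = [(haversine_m(lat, lon, p["lat"], p["lon"]), p) for p in photos]
    hits.sort(key=lambda h: h[0])  # sort by distance only
    return [p for d, p in hits if d <= radius_m]


# Hypothetical archive records; the coordinates are invented for the example.
ARCHIVE = [
    {"title": "Main quad, 1910", "lat": 38.0000, "lon": -78.5000},
    {"title": "Old library steps, 1925", "lat": 38.0100, "lon": -78.5000},
]

if __name__ == "__main__":
    for photo in nearby_photos(38.0001, -78.5000, ARCHIVE):
        print("You are standing near:", photo["title"])
```

The hard part for a library is not this check. It is having clean, accurate location data attached to the archival images in the first place.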

Wayfinding within the library

While it would likely require some significant infrastructure and data cleanup, I would love to see a Glass app that directs a library user to a book on the shelf or the location of their reserved study room or consultation appointment. I imagine arrows appearing to direct someone left, right, straight, or even to crouch down to the lower shelf. While the tour idea is in some ways a passive app, wayfinding would be more active and possibly more engaging.

Wrap-up

The secondary use cases above are low-hanging fruit, and I expect libraries to jump on board with them quickly. Again, UVA has already forged a path for at least one of them. And I fully expect generic commercial solutions to emerge to handle these kinds of functions in a plug-and-play style.

Textual capture and analysis is a tougher nut to crack. I know I don’t have the coding chops to make it happen, and even if I started to learn today I wouldn’t pick it up before someone else gets there. Because someone will do this. Evernote, maybe, or some other company ready to burst onto the scene. But what if a library struck first? Or even someone like JSTOR or Hathi Trust? I’m not skilled enough to do it, but I know there are people out there in libraryland (and related circles) who are. I want to help our users better manage their research, to take it further than something like Zotero or the current complicated state of running a sophisticated text mining operation. The barriers to entry on this kind of thing are still high, even as we struggle to lower them. Ambient information gathering as enabled by wearable technology like Glass has the potential to help researchers over the wall.

Tomorrow I’ll write up my more general, less library-oriented impressions of using Glass.