“I’m the Emerging Technologies Librarian at UNC.”

“So what does that mean?”

Every time I meet someone new at work, that’s how the conversation goes.

My response usually consists of arm flailing and a disjointed summary of my duties. I’m working on that. But I think people mostly don’t know what my job defines as an “emerging technology”.

To be honest, as the years go by I’m less a fan of that term. “Emerging” is too broad. Any new technology emerges, just by virtue of being new. Solar power is an emerging technology, and even something as simple as seatbelts once was too. I can’t keep an eye on everything. Instead, I find myself looking at a new technology and asking: Is it disruptive to libraries? “Disruptive” does a better job of defining what I deal with on a day-to-day basis. The technologies I look at tend to be new and emerging, but as they emerge they also disrupt libraries and the way we do things.

I tend to define things by removing what they aren’t, and there’s a lot more tech that doesn’t disrupt libraries than tech that does. Xbox Kinect is interesting and definitely emerging, but I don’t see a lot of immediate disruption coming from it in my academic library corner of the world. I also don’t see a lot of relevance for 3D printers in the core parts of my particular work environment, but they’re definitely emerging as technology. As sci-fi author Neal Stephenson recently noted in Arc 1.3, “…[3D printing] isn’t a disruptive idea on its own. It becomes disruptive when people find their own uses for it.” It’s when an actual or likely use impacts libraries that I pay more attention.

So now I have to define what makes a technology disruptive for my purposes. My definition is a bit hard to nail down, but I think I’ve settled on something close to “a technology that could change the way academic libraries deliver services and information.”

Based on that, eBooks are an obvious disruptive technology in libraries. And in a general sense the web continues to disrupt everything in our core mission.

Now I’ve established criteria for which disruptive technologies I deal with in my job. But how do I spot disruptive technologies for evaluation in the first place? Disruptive technology arrives in two different flavors. The first kind does something new and interesting well, but misses a basic feature of an existing technology. The second kind creates an entirely new niche for itself, carving out existence without an obvious analogue anywhere else.

TYPE ONE

Google Voice is a prime example of the first kind of disruptive tech. It adds a number of very useful features to our venerable old phone numbers, but also doesn’t support MMS messaging or certain types of SMS shortcodes at all. I don’t use either of those features on my phone often, but it’s enough that I’d miss them if I moved over to Google Voice.

Later, the disruptive tech might fill in those gaps and be more fully emerged as a replacement. But I have real trouble coming up with examples of tech that successfully made this transition. Google Voice is still plugging right along, but shows no signs of fixing my dealbreakers. Other examples have been less fortunate; their feature gaps were important enough that they eventually faded away. Netbooks took off on their amazing portability and battery life, but their tiny keyboards and often limited processing power meant they peaked early and are now fading. Google Wave tried to reinvent email with a treasure trove of added features, but had an impenetrable UI and lacked a clear use case. It lasted 15 months. Uber’s car service is heavily disrupting the taxi industry, but is so far outside the box that it’s meeting significant legal pushback and sabotage there. Look at 3D printers again: they provide all kinds of disruptive challenges to traditional manufacturing. But the technology is also extremely fiddly and requires a lot of customization, expertise and constant adjustment to use. Its future will depend on whether the printers can overcome those gaps and more fully emerge into everyday use.

In the academic library world, this first type of disruptive technology describes ebooks perfectly. They add new functionality to the traditional task of consuming text, but thanks to DRM and licensing we can’t share them as easily and have questions about long-term viability of the titles in our collection. Ebook readers fit too, for similar reasons. I’m obviously keeping a close eye on them and am involved with a number of ebook-related projects and programs on campus. The recent trend of massive open online courses like Udacity and Coursera qualifies as this type of disruption as well, though for higher ed in general. Instant messaging continues to disrupt the way we provide service at the reference desk. So those are three areas I’m focusing on lately.

TYPE TWO

Not all emerging technologies fit that first model. Instead of changing something we already have, the disruption a technology creates may carve out a whole new space for itself. The iPad is the obvious example here; Apple pretty much created the modern tablet market. But despite being a new market, tablets still disrupt laptops, ebook readers and smartphones. Cell phones in a general sense fit this second model of disruption too, incidentally. I have a harder time coming up with more examples here, especially ones relevant to academic libraries. Most of our disruptions come from modifications to existing technologies or systems, and very few spring forth into an entirely new niche. Still, iPads and other tablets have huge implications for desktop computing facilities in my library and on my campus. Even if the disruption isn’t obvious, it’s still important to recognize the difference in how it comes about. Libraries need to keep an eye on changes to both current niches and the emergence of entirely new ones.

PHASES OF DISRUPTION

No matter which type of disruption a technology fits, all of them go through early, middle and late phases of disruption. Early on, they’re pretty experimental with notable feature gaps. Google Wallet and its system of NFC payments fit the early bill right now. I think Google Voice is stuck in this early phase too, and shows no indication of advancing beyond it. Before the release of the Kindle I’d also have put ebooks at this point. They were a niche interest at best.

By the middle phase, a technology has a foothold in the general public – not just among early adopters. In April we learned that 21% of American adults read an ebook last year, and 45% now own a smartphone. They’re not anywhere near universal adoption yet, but it’s significant and trending upward.

Eventually some of these technologies close in on finishing their disruption. By that point they’re into the late phase. I classify MP3s as a late phase disruption, for example. In many demographics they’ve completely replaced CDs, the technology they disrupted. Of course CDs, vinyl, and other music distribution methods do still exist. Not everyone has the technical literacy to make the change in their personal music collection, though an increasing majority do.

After the final stage of disruption, that ‘emerged’ term pops up again. Emerging technologies go through phases of disruption, but ultimately must become fully emerged or at some point fade away. Blogs disrupted traditional web publishing (if there can be said to be such a thing), but are now a fact of online life. They’re emerged. Digital cameras and (non-smartphone) cell phones are emerged too.

FULL CIRCLE

We’ve come back around to dealing with emerging technologies. But on a day-to-day basis, I’m more concerned with following their progress through phases of disruption. If we can figure out which technologies with potential implications for libraries will make it through the phases, we can get ahead of the game. Or at least keep pace and stop anything from blowing up in our faces.

And that’s why I flail my arms when someone asks me what my job title means: I haven’t found a way to distill all this into a sound bite yet. But as a collective institution, libraries are ripe for disruption. In my job I try to keep a practical focus on the horizon and do my part to keep us a bit ahead of the curve.

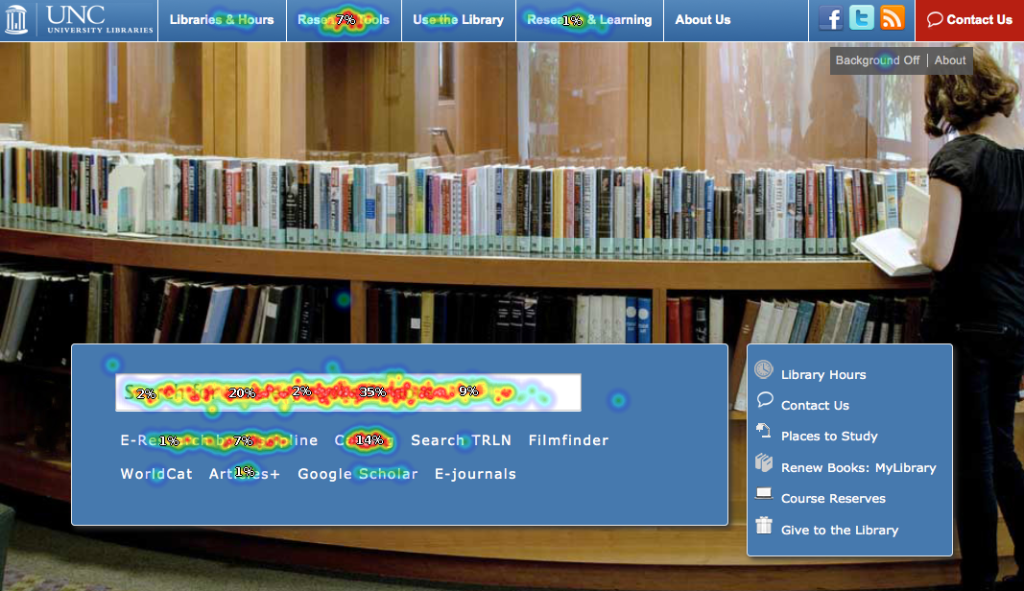

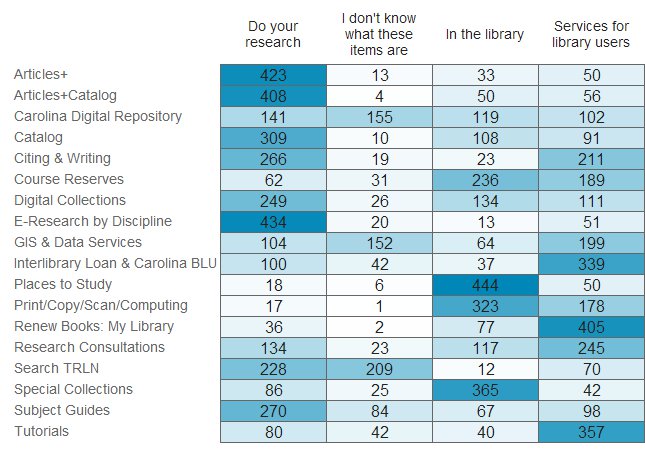

Usability testing is one of the best parts of my job. I love hearing from users about how they interact with the library’s website and then figuring out what we can change to better meet their needs.
